This project is the culmination of our work in the Robotics course, undertaken by a team of four members. We developed a mobile platform equipped with an RGB-D camera and a lidar to help people locate lost belongings within confined spaces. The system also provides an intuitive GUI and a command prompt for users to interact with.

Introduction

SEARCHING is important!! According to statistics, we dedicate nearly 5,000 hours of our lives to finding things within our homes alone.

This number is likely to increase as our activities extend beyond our homes.

Remarkably, current commercial products fail to autonomously assist users in locating their belongings.

To address this gap, our project introduces a robot designed specifically for this purpose.

Our aim is to enhance the quality of daily living by offering an autonomous solution to the challenge of finding lost items.

Approaches

Hardware Architecture

The whole system is based on ROS and is composed of two machines: a rover and a remote computer.

> 1.Rover

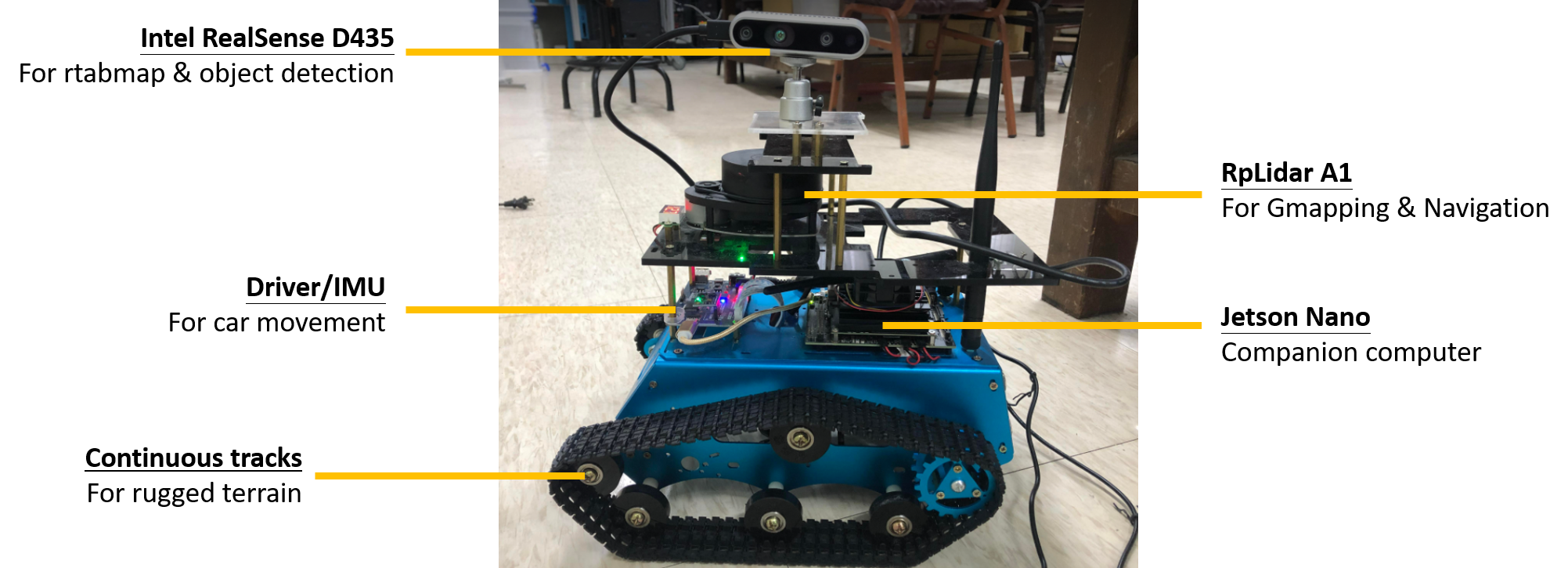

Figure 1 shows the hardware architecture of the robot:

Figure 1. Hardware architecture

The Jetson Nano is the rover's companion computer and processes the signals from the sensors mounted on the rover. The RpLidar is a 2D, 360-degree lidar with a detection range of 0.15 to 6 meters. A motor driver and an IMU are mounted at the front of the rover, and an RGB-D camera is mounted on top to provide visual information.
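As a minimal sketch of how the lidar's rated range could be respected in software, the snippet below discards readings outside the 0.15 to 6 meter window. It works on a plain list of distances laid out like the `ranges` field of a `sensor_msgs/LaserScan` message. The function name `filter_scan` and the 1-degree beam spacing are assumptions for illustration, not part of the project code.

```python
import math

# Rated detection window of the lidar, in metres (from the description above).
RANGE_MIN_M = 0.15
RANGE_MAX_M = 6.0


def filter_scan(ranges, angle_min=0.0, angle_increment=math.radians(1.0)):
    """Return (angle, range) pairs for beams inside the rated window.

    `ranges` is a flat list of distances, one per beam. The default
    1-degree beam spacing is an assumption for this example.
    """
    valid = []
    for i, r in enumerate(ranges):
        # Drop inf/NaN returns and anything outside the rated range.
        if math.isfinite(r) and RANGE_MIN_M <= r <= RANGE_MAX_M:
            valid.append((angle_min + i * angle_increment, r))
    return valid


# Hypothetical readings: too close, valid, valid, no return, valid, too far, NaN.
sample = [0.05, 0.15, 2.5, float("inf"), 6.0, 7.2, float("nan")]
kept = filter_scan(sample)
print([r for _, r in kept])
```

Keeping the beam angle next to each surviving distance means later steps can still tell which direction a reading came from after invalid beams are removed.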

> 2.Remote Computer

The remote computer runs Ubuntu with ROS. Its primary responsibilities are initializing the rover and providing a GUI for users to locate objects. For real-time monitoring of the robot's status and environment, Rviz runs on the remote computer and visualizes the TF tree and the constructed map. This visualization plays a crucial role in examining the robot's movements and its evolving spatial awareness during operation.

Software Architecture

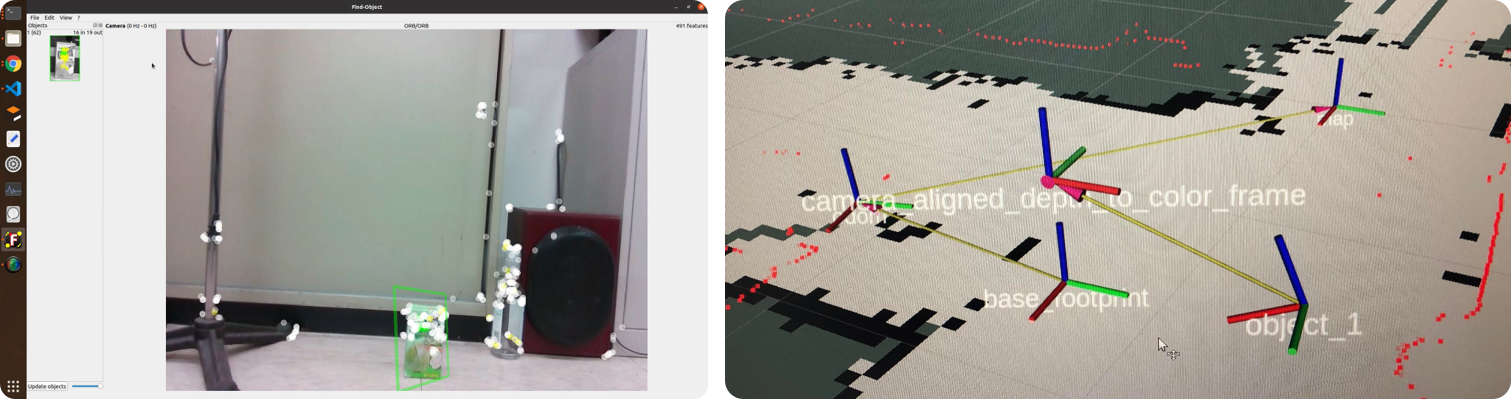

Figure 2. Screenshot of the remote computer. The left figure is the find object GUI using Find_object_2d. The right figure is the TF tree and constructed map displayed in Rviz.

> 1.Mapping

Building a map for the rover to navigate requires SLAM. In our system, either a lidar-based or a visual SLAM (vSLAM) system could be used. The Realsense camera is an RGB-D camera that provides both RGB images and depth information for vSLAM. The vSLAM system we originally used was RTAB-Map, which supports RGB-D cameras, stereo cameras, and lidar. However, due to the limited computing power of the companion computer, RTAB-Map could not achieve results as good as when running on a PC. We therefore switched to GMapping as an alternative and introduced the RpLidar to take over the mapping task.
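GMapping publishes its map as a `nav_msgs/OccupancyGrid`: a row-major array of cells with a resolution and an origin, where -1 means unknown and 0 to 100 is the occupancy probability. The sketch below shows how a world coordinate could be looked up in such a grid. `GridMap` is a hypothetical stand-in for the message fields, and the lookup assumes the map origin is not rotated.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GridMap:
    """Hypothetical stand-in for the fields of a nav_msgs/OccupancyGrid."""
    width: int          # number of cells along x
    height: int         # number of cells along y
    resolution: float   # metres per cell
    origin_x: float     # world x of the corner of cell (0, 0)
    origin_y: float     # world y of the corner of cell (0, 0)
    data: List[int]     # row-major; -1 unknown, 0 free ... 100 occupied

    def cell_at(self, x: float, y: float) -> Optional[int]:
        """Return the occupancy value at world point (x, y), or None if off-map.

        Assumes the map origin has no rotation.
        """
        col = math.floor((x - self.origin_x) / self.resolution)
        row = math.floor((y - self.origin_y) / self.resolution)
        if not (0 <= col < self.width and 0 <= row < self.height):
            return None
        return self.data[row * self.width + col]


# A made-up 4x4 map with 0.5 m cells, covering x and y from -1.0 to 1.0.
cells = [0] * 16
cells[2] = 100    # row 0, col 2: occupied
cells[12] = -1    # row 3, col 0: unknown
grid = GridMap(width=4, height=4, resolution=0.5,
               origin_x=-1.0, origin_y=-1.0, data=cells)

print(grid.cell_at(0.25, -0.75))
print(grid.cell_at(5.0, 5.0))
```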

> 2.Navigation

After building a map with SLAM, the move_base package is responsible for navigating the rover. It lets us move the robot to a desired position by setting goal points. These goal points are obtained by combining the object detection system with the SLAM system: when a target object is detected, the current pose (position and orientation) of the rover is recorded. Users can then enter the desired target, and the rover moves to the recorded destination with the help of move_base.
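The record-then-navigate idea can be sketched in plain Python as follows. When an object is detected, the rover's pose is stored under the object's label. When the user asks for that label, the stored pose is turned into the fields a move_base goal needs (a frame, a position, and an orientation quaternion). The names `Pose2D`, `ObjectPoseRegistry`, and `goal_for` are hypothetical and are not taken from the project code. The actual ROS call that sends the goal is left out so the example runs without ROS.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Pose2D:
    x: float     # metres, in the map frame
    y: float     # metres, in the map frame
    yaw: float   # heading in radians


def yaw_to_quaternion(yaw: float) -> Tuple[float, float, float, float]:
    """Return the (x, y, z, w) quaternion for a rotation of `yaw` about z."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


class ObjectPoseRegistry:
    """Remembers where the rover was when each object was last detected."""

    def __init__(self) -> None:
        self._poses: Dict[str, Pose2D] = {}

    def record(self, label: str, rover_pose: Pose2D) -> None:
        # A later detection of the same object overwrites the earlier pose.
        self._poses[label] = rover_pose

    def goal_for(self, label: str) -> Optional[dict]:
        """Build the goal fields for `label`, or None if it was never seen."""
        pose = self._poses.get(label)
        if pose is None:
            return None
        return {
            "frame_id": "map",
            "position": (pose.x, pose.y, 0.0),
            "orientation": yaw_to_quaternion(pose.yaw),
        }


registry = ObjectPoseRegistry()
registry.record("keys", Pose2D(x=1.0, y=2.0, yaw=math.pi / 2))

goal = registry.goal_for("keys")     # fields for a navigation goal
missing = registry.goal_for("wallet")  # never detected, so None
print(goal["position"], missing)
```

In a ROS node, the returned fields would typically be copied into a `MoveBaseGoal` and sent through an action client. That step is omitted here.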

> 3.Object Detection

For object detection, we use Find_object_2d, a ROS package that integrates various feature detectors and descriptors to choose from. The package also provides a decent GUI on which we can build further functions. During development, we also tried YOLO as our detector. YOLO is a state-of-the-art, real-time object detection system known for its speed and accuracy. However, because we lacked a sufficiently large dataset of the target objects, we could not obtain satisfactory accuracy and eventually abandoned YOLO.
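According to the find_object_2d wiki, detections are published on the `objects` topic as a flat `std_msgs/Float32MultiArray` with 12 values per object: the object ID, its width and height, and a 3x3 homography (h11 to h33, where h31 and h32 are the x and y offsets). A minimal sketch of unpacking that array is shown below. The `OBJECT_LABELS` table mapping IDs to names is a hypothetical example, not the project's actual object set.

```python
from typing import Dict, List

FIELDS_PER_OBJECT = 12  # id, width, height, then 9 homography values

# Hypothetical mapping from find_object_2d IDs to human-readable names.
OBJECT_LABELS = {1: "keys", 2: "wallet"}


def parse_objects(data: List[float]) -> List[Dict]:
    """Split the flat `objects` array into one record per detection."""
    if len(data) % FIELDS_PER_OBJECT != 0:
        raise ValueError("array length is not a multiple of 12")
    detections = []
    for start in range(0, len(data), FIELDS_PER_OBJECT):
        chunk = data[start:start + FIELDS_PER_OBJECT]
        object_id = int(chunk[0])
        detections.append({
            "id": object_id,
            "label": OBJECT_LABELS.get(object_id, "unknown"),
            "width": chunk[1],
            "height": chunk[2],
            "homography": chunk[3:12],       # h11, h12, ..., h33
            "offset": (chunk[9], chunk[10]),  # h31 = dx, h32 = dy
        })
    return detections


# Made-up message payload containing two detections.
sample = [
    1.0, 120.0, 80.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 30.0, 45.0, 1.0,
    7.0, 60.0, 60.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 200.0, 10.0, 1.0,
]
detections = parse_objects(sample)
print([(d["id"], d["label"], d["offset"]) for d in detections])
```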

Results

The robot's operation can be divided into three stages: manual mapping using GMapping, automatic searching for object locations, and guiding the user with automatic navigation.

> Demo Video

References

- GMapping: http://wiki.ros.org/gmapping
- move_base: http://wiki.ros.org/move_base
- Find_object_2d: http://wiki.ros.org/find_object_2d

> [Note] For full code, please visit: https://github.com/htliang517/MobileLostAndFound-MLF6110